Drawing to Real

Overfit

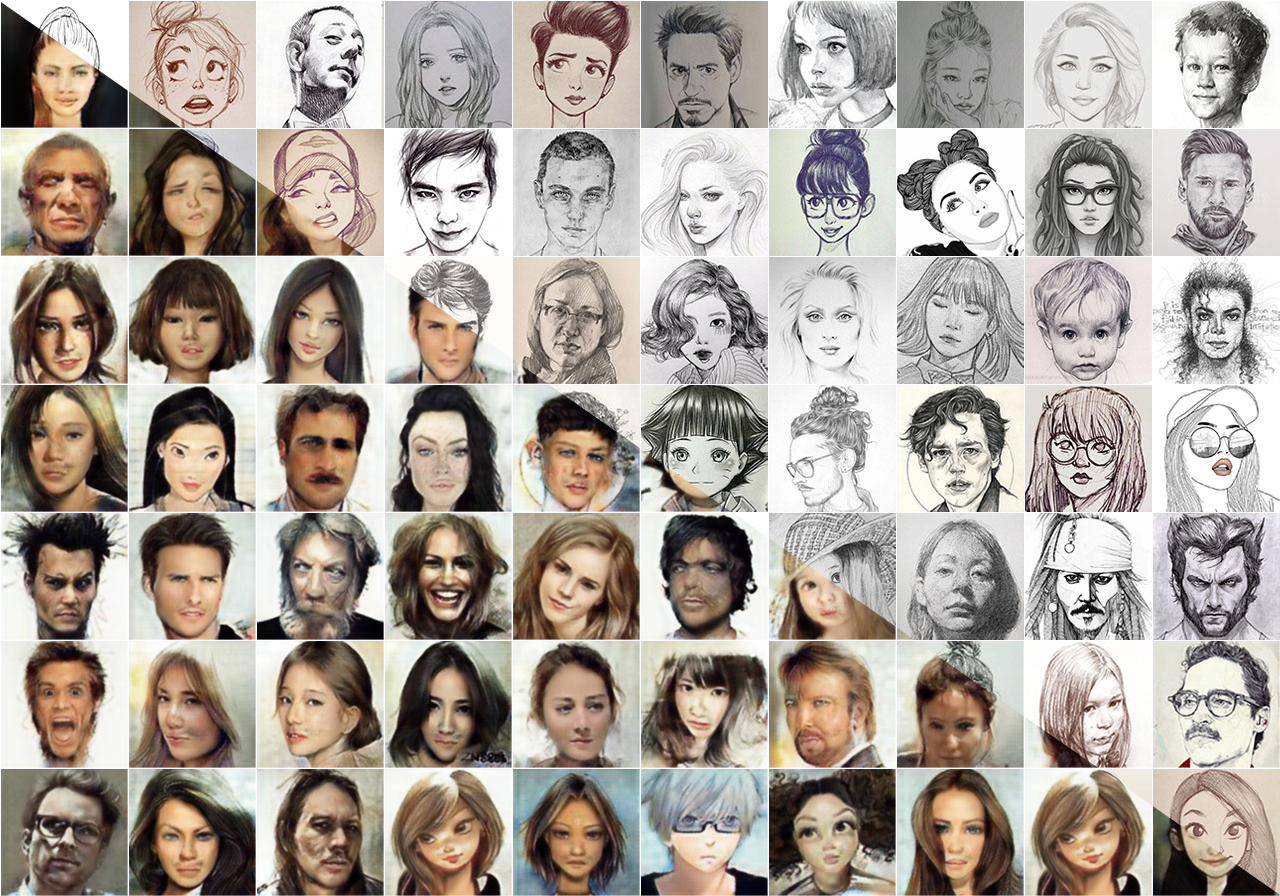

Overfitting a drawing to look like a real face shows an interesting result: the general expression is kept, while the features that can't be matched are overwritten.

Intro

(Artificial) neural networks have been around for a good while, but recently their applications and the quality of their results have expanded greatly. With the advent of convolutional neural networks and deep learning, many new image applications emerged and achieved impressive results in tasks like detection, classification and synthesis. These results are tied to advances in theory, creativity, computational power and the data available to learn from.

The predecessor of all that was a basic unit called the perceptron, which dates from 1957. It was basically a weighted sum of its inputs with a threshold function applied to the output, where the weights are learned from the data. Today the principle is still somewhat the same, but on a much larger scale: many basic units grouped in layers, many layers (depth) grouped in networks, and networks working together or against each other. The basic unit is now more general: it accepts convolution filters as weights, many different functions can be applied to the output of each unit, and other procedures such as pooling are placed in between. Those alone improved the handling of images by neural networks for the aforementioned tasks, but the inflection point was the creativity in new layouts and in ways of using several networks together.
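To make the "weighted sum plus threshold" idea concrete, here is a minimal sketch of a single perceptron in plain Python. The function name `perceptron` and the values in `and_weights` and `and_bias` are illustrative assumptions: they are picked by hand, not learned, so that the unit behaves like a logical AND.

```python
def perceptron(inputs, weights, bias):
    """Return 1 if the weighted sum of the inputs plus the bias is positive, else 0."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    # Threshold (step) function applied to the output of the unit.
    return 1 if weighted_sum > 0 else 0


# Hand-picked (hypothetical, not learned) parameters that implement a logical AND.
and_weights = [1.0, 1.0]
and_bias = -1.5

# Evaluate the unit on every combination of two binary inputs.
outputs = [perceptron([a, b], and_weights, and_bias) for a in (0, 1) for b in (0, 1)]
print(outputs)  # [0, 0, 0, 1]
```

The bias plays the role of the threshold: the unit fires only when the weighted sum of the inputs exceeds 1.5, which here requires both inputs to be 1.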

The most interesting part comes from the creativity in using such a simple model: ideas like stacking many layers, changing the forward process (skipping layers), downsampling and upsampling within the layers, and even coupling networks in non-trivial ways (GANs).

One question still remains: how to learn from data? It follows the general idea of human learning. You give a labeled example and ask for an output: does it match the label? If so, you tell the network it's good; otherwise you adjust the weights accordingly. This is done as an iterative optimization process. You feed your data forward to obtain a result; with some metric or more complicated function you describe how far that result is from the expected one (the error); then you backpropagate the error, adjusting the weights of all units to decrease it.
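The loop described above (forward pass, error, weight adjustment) can be sketched on the smallest possible case: a single sigmoid unit learning the logical OR. This is only an illustration under stated assumptions, not the training code behind the images in this post. The names `train`, `predict` and `or_samples`, the learning rate and the number of epochs are all hypothetical choices, and the error is assumed to be the log loss, whose gradient with respect to the weighted sum is simply `output - label`. With no hidden layers, backpropagation reduces to that single gradient step.

```python
import math


def sigmoid(z):
    """Smooth replacement for the threshold function, with output in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(inputs, weights, bias):
    """Forward pass: weighted sum of the inputs followed by the sigmoid."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train(samples, epochs=1000, learning_rate=0.5):
    """Fit one sigmoid unit by per-example gradient descent on the log loss."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, label in samples:
            # 1. Feed the example forward to obtain a result.
            output = predict(inputs, weights, bias)
            # 2. Measure the error; for the log loss its gradient w.r.t. the
            #    weighted sum is just (output - label).
            delta = output - label
            # 3. Propagate the error back: move each weight against its gradient.
            weights = [w - learning_rate * delta * x for w, x in zip(weights, inputs)]
            bias -= learning_rate * delta
    return weights, bias


# Labeled examples for the logical OR (illustrative data).
or_samples = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

weights, bias = train(or_samples)
predictions = [round(predict(inputs, weights, bias)) for inputs, _ in or_samples]
print(predictions)
```

In a deep network the same three steps are repeated, but step 3 applies the chain rule layer by layer, which is what automatic differentiation libraries do for you.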

All of the above is a drop of water in the big ocean that is deep learning today, but it serves as a small non-technical introduction.

Generating images with the same content but a different style

Image translation

Overfit

Overfitting a drawing to look like a real face shows an interesting result: the general expression is kept, while the features that can't be matched are overwritten.

Underfit

On the other hand, translating real faces to drawings ends in a realistic drawing: the general traces and geometry are not changed.